SwarmTouch: A tactile interaction strategy for human-swarm communication

(From phys.org)

Researchers at the Skolkovo Institute of Science and Technology (Skoltech) in Russia have recently introduced SwarmTouch, a new strategy to enhance interactions between humans and robotic swarms. This strategy, presented in a paper pre-published on arXiv, allows a human operator to communicate with a swarm of nano-quadrotor drones and guide their formation, while receiving tactile feedback in the form of vibrations.

“We are working in the field of swarm of drones and my previous research in the field of haptics was very helpful in introducing a new frontier of tactile human-swarm interactions,” Dzmitry Tsetserukou, Professor at Skoltech and head of Intelligent Space Robotics laboratory, told TechXplore. “During our experiments with the swarm, however, we understood that current interfaces are too unfriendly and difficult to operate.”

While conducting research investigating strategies for human-swarm interaction, Tsetserukou and his colleagues realised that there are currently no available interfaces that allow human operators to easily deploy a swarm of robots and control its movements in real time. At the moment, most swarms simply follow predefined trajectories, which have been set out by researchers before the robots start operating.

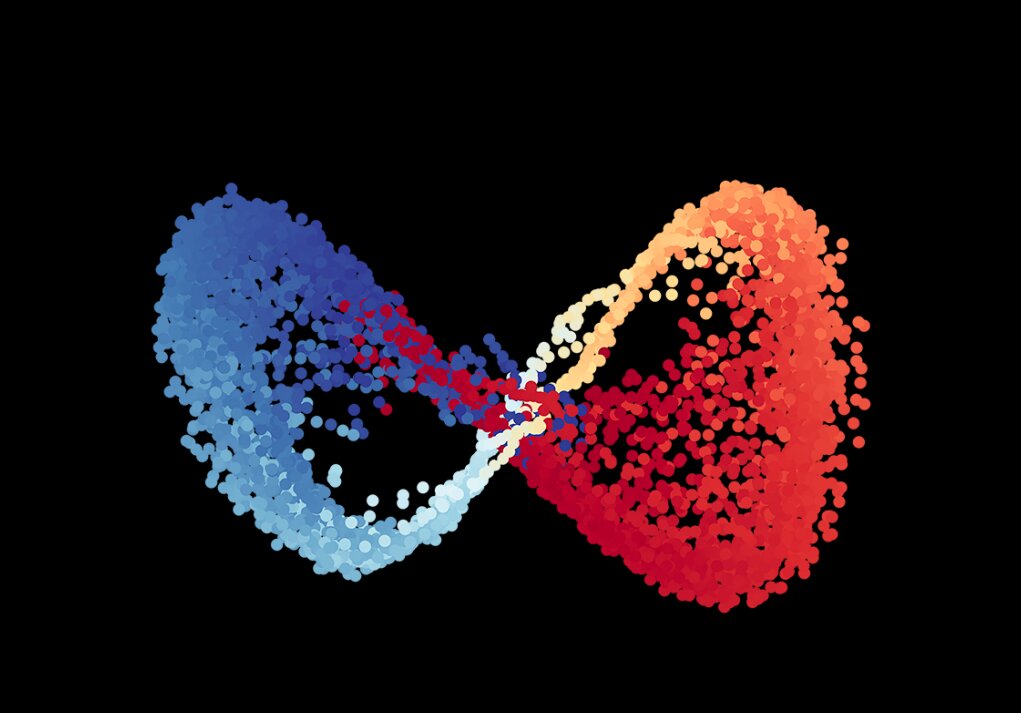

The human-swarm interaction strategy proposed by the researchers, on the other hand, allows a human user to guide the movements of a swarm of nano-quadrotor robots directly. It does this by considering the velocity of the user’s hand and changing the swarm’s formation shape or dynamics accordingly, using simulated impedance interlinks between the robots to produce behaviors that resemble those of swarms occurring in nature.

The system devised by the researchers includes a wearable tactile display that delivers vibration patterns to the user’s fingers, informing them of the current swarm dynamics (i.e., whether the swarm is expanding or shrinking). Guided by these patterns, users can change the swarm dynamics, for example so that the swarm avoids obstacles, simply by moving their hand at different speeds or in different directions.
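To make the idea of "expanding or shrinking" concrete, here is a minimal sketch of how such a state could be derived from drone positions before being mapped to a vibration pattern. It is not the researchers' method: the functions `mean_spread` and `swarm_state`, the 2-D positions, the spread measure and the `tolerance` value are all assumptions made for illustration.

```python
import math


def mean_spread(positions):
    """Average distance of the drones from their centroid (hypothetical measure)."""
    n = len(positions)
    cx = sum(p[0] for p in positions) / n
    cy = sum(p[1] for p in positions) / n
    return sum(math.hypot(p[0] - cx, p[1] - cy) for p in positions) / n


def swarm_state(prev_positions, curr_positions, tolerance=0.01):
    """Label the swarm as expanding, shrinking or steady between two snapshots.

    The tolerance (in the same units as the positions) is an assumed dead band
    that keeps small jitter from triggering a state change.
    """
    change = mean_spread(curr_positions) - mean_spread(prev_positions)
    if change > tolerance:
        return "expanding"
    if change < -tolerance:
        return "shrinking"
    return "steady"


# Four drones in a unit square, then the same formation scaled by two.
square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
wide = [(2 * x, 2 * y) for x, y in square]
print(swarm_state(square, wide))
```

A tactile display would then pick a vibration pattern for each label. The patterns themselves are not described in this article, so they are left out here.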

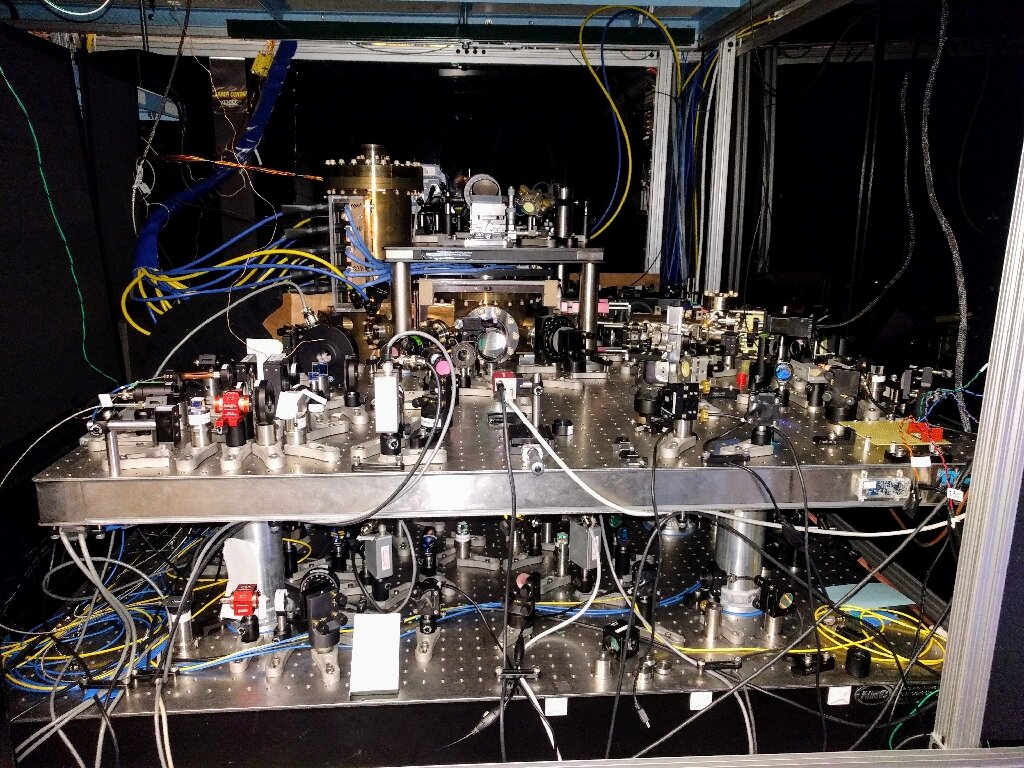

The system detects the position of the user’s hand using a high-precision motion capture system, the Vicon Vantage V5. In addition, the human operator and individual robots in the swarm are connected through impedance interlinks.

“These links behave like springs-dampers,” Tsetserukou explained. “They prevent drones from flying close to the operator and to each other and from starting or stopping abruptly. Our strategy considerably improves the safety of human-swarm interactions and makes the behaviors of the swarm similar to those of real biological systems (e.g. bee swarms).”
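The spring-damper behavior described in the quote can be illustrated with a one-dimensional virtual mass-spring-damper obeying m·x'' + d·x' + k·x = f_ext. This is a minimal sketch under stated assumptions, not the researchers' implementation: the `ImpedanceLink` class, the `simulate` helper, the parameter values and the use of a constant external force as a stand-in for the operator's input are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ImpedanceLink:
    """1-D virtual mass-spring-damper: m*x'' + d*x' + k*x = f_ext."""
    mass: float = 1.0       # virtual mass (assumed value)
    damping: float = 5.0    # virtual damping (assumed value, overdamped)
    stiffness: float = 4.0  # virtual stiffness (assumed value)
    x: float = 0.0          # displacement from the rest offset
    v: float = 0.0          # rate of change of the displacement

    def step(self, f_ext: float, dt: float) -> float:
        # Solve the impedance equation for the acceleration.
        a = (f_ext - self.damping * self.v - self.stiffness * self.x) / self.mass
        # Semi-implicit Euler: update velocity first, then position.
        self.v += a * dt
        self.x += self.v * dt
        return self.x


def simulate(link: ImpedanceLink, f_ext: float, duration: float, dt: float = 0.001):
    """Apply a constant external input and record the displacement over time."""
    steps = int(round(duration / dt))
    return [link.step(f_ext, dt) for _ in range(steps)]


# A constant input of 2.0 settles smoothly toward f_ext / stiffness = 0.5.
trace = simulate(ImpedanceLink(), f_ext=2.0, duration=10.0)
print(round(trace[-1], 3))
```

Because the damping is chosen large relative to the stiffness, the displacement approaches its target gradually rather than jumping or oscillating, which is the kind of smooth start and stop the quote attributes to the links.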

The key advantage of the human-swarm interaction strategy devised by Tsetserukou and his colleagues is that it lets users experience the motion of a robot swarm directly through their fingertips. It also lets operators change swarm dynamics in real time, so that the robots can navigate cluttered and complex environments, such as urban centres filled with skyscrapers or other obstacles.

Preliminary tests evaluating this new tactile interaction strategy revealed that users correctly interpreted the vibrations on their fingertips most of the time. Most of the participants felt that the tactile sensation improved their ability to guide the drones, while also making their communication with the swarm more interactive.

In the future, SwarmTouch, the strategy developed by Tsetserukou and his colleagues, could be used to train swarms to navigate in warehouses, deliver goods within urban environments and even inspect bridges or other infrastructure. The researchers will soon be presenting another approach, called CloakSwarm, at the ACM SIGGRAPH Asia 2019 conference.

They are also working on two additional drone-human interaction strategies, SlingDrone and WiredSwarm, which will be demonstrated at the ACM VRST 2019 conference. SlingDrone, the first of these strategies, is a mixed reality paradigm that allows users to operate drones using a pointing controller in an interactive way, producing a slingshot-like motion.

“This approach is somewhat similar to the popular mobile game Angry Birds, but with users pulling a real drone with a rope instead of on a touch screen, in order to navigate its ballistic trajectory in virtual reality,” Tsetserukou explained. “SlingDrone allows you to point a virtual drone in the direction you want it to fly in and at the same time a real drone will fly to the target position and bring you the object you wish to get hold of. WiredSwarm, on the other hand, is a swarm of drones that are attached to the user’s fingers with leashes, which can provide high-fidelity haptic feedback to a VR user. We call this new type of interface the first flying wearable haptic interface.”